We at Digiquads are on a mission to help 100 businesses boost their online sales by at least 30% this financial year. If you would like yours to be one of them, mail us your requirements.

PS: Our eCommerce division has seen tremendous growth, so we wanted to share the principles, methods, practices (and secrets) you can use to skyrocket your eCommerce business. If you have any queries, leave a comment on this blog or write to us at hello@digiquads.com.

Digiquads Mission Statement

What it is, how it works, what it means for search & what its benefits are.

Google said its latest major search update, the introduction of the BERT algorithm, would help it better understand the intent behind users' search queries, which should result in more relevant results. The company said BERT would affect 10% of searches (one in ten English-language searches in the U.S.), which means it is likely to affect the organic visibility and traffic of your product.

When was BERT introduced in Google Search?

BERT was rolled out in Google's search system during the week of October 21, 2019, for English-language queries, including featured snippets.

The algorithm will be extended to all the languages in which Google offers Search, but there is no set timeline yet, according to Google's Danny Sullivan. A BERT model is also being used to improve featured snippets in two dozen countries.

The BERT algorithm is showing impact across websites.

What is BERT?

BERT, which stands for Bidirectional Encoder Representations from Transformers, is a neural network-based technique for natural language processing pre-training. Among SEOs, BERT may be considered an algorithmic update, but it is really better described as the application of a multi-layer system that understands polysemous nuance and is better able to resolve co-references about "things" in natural language, continually fine-tuning itself through self-learning.

BERT's entire purpose is to improve machines' understanding of human language. In a search context, this applies both to the written or spoken queries issued by search engine users and to the content search engines gather and index. In search, BERT is mostly about resolving the linguistic ambiguity of natural language. BERT provides text cohesion, which often comes from small details in a sentence that provide structure and meaning.

BERT is not an algorithmic update like Penguin or Panda, because BERT does not judge web pages either negatively or positively; instead, it improves Google Search's understanding of human language. As a result, Google understands much more about the meaning of the content on the pages it indexes, as well as the queries users issue, taking into account the full context of each word.

What is a Neural Network?

Put simply, neural network algorithms are designed to recognize patterns. Classifying image content, recognizing handwriting, and even predicting trends in financial markets are typical real-world applications of neural networks, not to mention search applications such as click models.
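As a minimal illustration of what "recognizing patterns" means, the Python sketch below trains a single artificial neuron (a classic perceptron, far simpler than the deep, multi-layer networks behind BERT) to recognize the logical AND pattern from four labelled examples. The function names, learning rate and number of passes are illustrative assumptions, not anything Google uses.

```python
# A single artificial neuron (perceptron) learning the logical AND pattern.
# Toy example only; BERT uses deep, multi-layer networks.

def predict(weights: list[int], bias: int, x: tuple[int, int]) -> int:
    # Step activation: output 1 if the weighted sum is above zero, else 0.
    total = weights[0] * x[0] + weights[1] * x[1] + bias
    return 1 if total > 0 else 0

def train(samples, epochs: int = 10, lr: int = 1):
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            # Perceptron rule: shift weights and bias toward the correct answer.
            weights[0] += lr * error * x[0]
            weights[1] += lr * error * x[1]
            bias += lr * error
    return weights, bias

AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(AND_SAMPLES)
preds = [predict(weights, bias, x) for x, _ in AND_SAMPLES]
print(preds)  # [0, 0, 0, 1]
```

Nobody writes a rule saying "output 1 only when both inputs are 1"; the neuron arrives at that behaviour by repeatedly correcting its own mistakes on examples, which is the basic idea that much larger networks scale up.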

What is Natural Language Processing?

Natural language processing (NLP) is a branch of artificial intelligence that deals with linguistics, with the aim of enabling computers to understand how people naturally communicate.

Examples of advances made possible by NLP include social listening tools, chatbots, and word suggestions on your smartphone.

In and of itself, NLP is not a new search engine technology. However, BERT represents an advance in NLP through bidirectional training (more on that below).

BERT Model: How does BERT work?

BERT's advance lies in its ability to train language models on the entire set of words in a sentence or query (bidirectional training), rather than the conventional approach of training on the ordered sequence of words (left-to-right, or combined left-to-right and right-to-left).

BERT allows the language model to learn a word's meaning from all of its surrounding words, rather than only the word immediately preceding or following it. Google calls BERT "deeply bidirectional" because its contextual representations start "from the very bottom of a deep neural network."

“For example, the word ‘bank’ would have the same context-free representation in ‘bank account’ and ‘bank of the river.’ Contextual models instead generate a representation of each word based on the other words in the sentence. For example, in the sentence ‘I accessed the bank account,’ a unidirectional contextual model would represent ‘bank’ based on ‘I accessed the’ but not ‘account.’ BERT, however, represents ‘bank’ using both its previous and next context: ‘I accessed the … account.’”
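To make the "bank" example concrete, here is a toy Python sketch of which neighbouring words each kind of model can draw on when it builds a representation for a target word. It is not BERT itself: the function names are hypothetical, and a real model turns this context into learned vectors rather than simply listing the words.

```python
# Toy illustration only: which neighbouring words each kind of model can
# draw on when representing a target word. Function names are hypothetical.

def left_to_right_context(tokens: list[str], i: int) -> list[str]:
    # A unidirectional (left-to-right) model sees only the words before position i.
    return tokens[:i]

def bidirectional_context(tokens: list[str], i: int) -> list[str]:
    # A bidirectional model like BERT sees the words on both sides of position i.
    return tokens[:i] + tokens[i + 1:]

for text in ["I accessed the bank account", "bank of the river"]:
    tokens = text.split()
    i = tokens.index("bank")
    print(text)
    print("  left-to-right:", left_to_right_context(tokens, i))
    print("  bidirectional:", bidirectional_context(tokens, i))
```

For "bank of the river", the left-to-right context of "bank" is empty, so the disambiguating word "river" is only visible to the bidirectional view; for "I accessed the bank account", only the bidirectional view includes "account".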

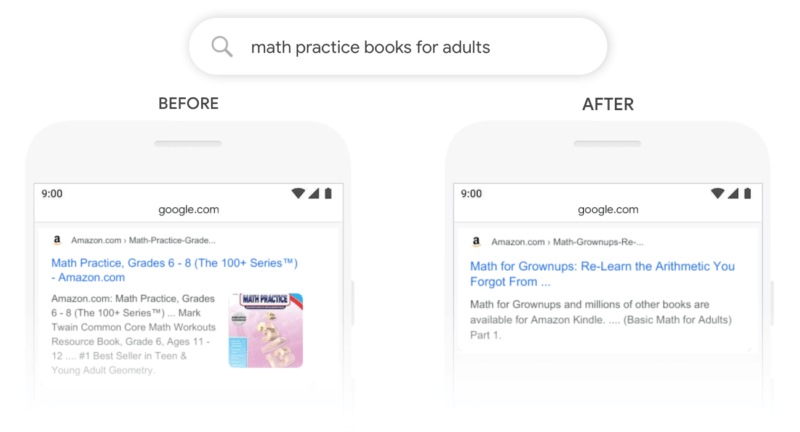

Google has shared several examples of how BERT can influence results in Search. In one example, the query “math practice books for adults” previously surfaced a listing for a Grade 6–8 book at the top of the organic results. With BERT, Google instead puts a listing for a book called “Math for Grownups” at the top.

With BERT, the search results reflect a better understanding of the query. Content aimed at young adults is not penalized; the adult-specific listing is simply judged to be better aligned with the searcher's intent.

BERT Benefits: How is BERT likely to refine Google Search?

BERT serves a multitude of purposes

Google BERT might be considered a kind of Swiss Army knife for Google Search.

BERT gives Google Search a solid linguistic foundation that can be continuously tuned by adjusting its weights and parameters, since many different kinds of tasks can be performed to understand natural language.

Tasks may include the following, among many others:

- Coreference resolution (keeping track of who, or what, a phrase or sentence refers to in context or in a long conversational query)

- Polysemy resolution (dealing with ambiguous nuance, where one word has several related meanings)

- Homonym resolution (dealing with words that sound the same but mean different things)

- Named entity determination (understanding which of several named entities a text relates to, since named entity recognition is not the same as named entity determination or disambiguation)

- Text entailment (next sentence prediction)
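Next sentence prediction is one of the tasks BERT was pre-trained on, and it also shows how training labels can come "for free" from unlabelled text. The Python sketch below (with hypothetical names) builds training pairs: roughly half the time sentence B really follows sentence A (IsNext), otherwise B is some other sentence (NotNext). As a simplifying assumption, negatives here are drawn from the same short list, whereas BERT's actual pre-training draws them from a large corpus.

```python
import random

def make_nsp_pairs(sentences: list[str], seed: int = 0) -> list[tuple[str, str, str]]:
    """Build (sentence_a, sentence_b, label) pairs from consecutive sentences.

    Simplified sketch: negatives come from the same list, not a full corpus.
    Needs at least three sentences so a negative candidate always exists.
    """
    rng = random.Random(seed)
    pairs = []
    for i in range(len(sentences) - 1):
        a = sentences[i]
        if rng.random() < 0.5:
            # Positive example: B is the sentence that actually comes next.
            pairs.append((a, sentences[i + 1], "IsNext"))
        else:
            # Negative example: B is any sentence other than A or its true successor.
            others = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            pairs.append((a, rng.choice(others), "NotNext"))
    return pairs

text = [
    "I opened the banking app.",
    "I checked my account balance.",
    "The balance was lower than expected.",
    "Rivers often flood in spring.",
    "Cats sleep a lot.",
]
for a, b, label in make_nsp_pairs(text):
    print(f"{label:8} | {a} -> {b}")
```

A model trained on such pairs learns to judge whether two sentences belong together, which is the kind of text-cohesion signal described earlier.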

BERT is going to be huge for conversational search & assistants

As Google's in-practice system continues to teach itself with more questions and sentence pairs, expect a quantum leap forward in relevance matching for conversational search.

Such leaps are likely to occur not only in English but, very soon, in other languages too, as there is a feed-forward learning dimension within BERT that appears to transfer to other languages.

BERT would probably help Google expand its conversational search

Expect a quantum leap forward in voice search applications over the short to medium term, since the heavy lifting of building up language comprehension, previously held back by the manual work of Pygmalion (Google's team of human linguists who hand-label training data), may no longer be necessary.

A 2016 Wired article concluded with the idea of automatic, unsupervised learning as an AI approach capable of replacing Google's Pygmalion and providing a scalable way to train neural networks:

“This is when machines learn from unlabelled data – massive amounts of digital information culled from the internet and other sources.” (Wired, 2016)

It sounds like Google BERT.

Pygmalion was even involved in creating featured snippets.

It is unclear whether BERT will affect the role and workload of Pygmalion, or whether featured snippets will be created the same way as before, but Google has confirmed that BERT will be used for featured snippets, and BERT is pre-trained on a large text corpus.

Either way, the self-learning nature of a BERT-type foundation, continually fed with queries and seeking out answers and featured snippets, could naturally push the learning forward and make it ever more advanced.

Consequently, BERT could offer a potentially far more flexible alternative to Pygmalion's laborious manual work.

International SEO can also benefit dramatically

One of BERT's major impacts could be on international search, since what BERT picks up in one language tends to transfer to other languages and domains as well.

Out of the box, BERT appears to have some multilingual properties, somehow derived from monolingual (single-language) corpora, and it was then extended to 104 languages in the form of M-BERT (Multilingual BERT).

Pires, Schlinger & Garrette's paper tested Multilingual BERT's multilingual capabilities and found it “surprisingly good at zero-shot cross-lingual model transfer” (Pires, Schlinger & Garrette, 2019). Zero-shot learning aims to help machines categorize things they have never seen before, so this is almost like understanding a language you have never seen.

Questions & Answers

Answering questions directly in the SERPs is likely to keep becoming more effective, which could lead to a further reduction in click-throughs to web pages.

Just as MS MARCO, a real dataset of questions and answers drawn from Bing users' queries, is used for fine-tuning, Google will probably keep fine-tuning its model for real-life search over time with real users' queries and answers, feeding the learning forward.

As its language understanding continues to improve, Google BERT's better grasp of paraphrases may also affect related questions in “People also ask.”

BERT was built to understand natural language, so keep your content natural. We should continue to create compelling, engaging, informative and well-structured content and site architectures, just as we would when writing and building sites for people.

On the search engine side, the changes are positive rather than negative. Google has clearly improved its grasp of the context created by text cohesion across sentences and phrases, and is gradually improving its understanding of nuance as BERT self-learns.

Search engines still have a long way to go, and BERT is just one step along the way, particularly because the meaning of a word is not the same as a search engine user's context or their sequential information needs, which are much more challenging problems.

SEOs still have a lot of work to do to help search engine users find their way and to guide them to the right information at the right time.